

What is a feature importance plot?

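Tree-based machine learning algorithms such as Random Forest and XGBoost come with a feature importance attribute that outputs an array containing one value per feature, representing how useful the model found that feature in trying to predict the target. In scikit-learn these values lie between 0 and 1 and sum to 1. A feature importance plot displays these values, typically as a bar chart sorted from most to least important.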
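Here is a minimal sketch of such a plot. It assumes scikit-learn, pandas and matplotlib are installed and uses the bundled iris dataset purely as example data. The importances are loaded into a pandas Series indexed by column name, so the bars are labelled automatically.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen backend for scripts; omit in a notebook
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

# Example data (assumption): iris as a DataFrame, so the columns carry feature names
data = load_iris(as_frame=True)
X, y = data.data, data.target

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Index the importances by column name and sort them for a readable bar chart
importances = pd.Series(model.feature_importances_, index=X.columns).sort_values()

ax = importances.plot.barh()
ax.set_xlabel("Importance (mean decrease in impurity)")
ax.set_title("Random forest feature importance")
plt.tight_layout()
plt.savefig("feature_importance.png")  # hypothetical output path
```

Sorting ascending before calling `barh` places the most important feature at the top of the chart.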

How do you show feature importance?

- Calculate the mean squared error with the original values.

- Shuffle the values of one feature and make predictions.

- Calculate the mean squared error with the shuffled values.

- Compare the two errors: the larger the increase, the more important the feature.
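The four steps above can be sketched as follows. This assumes a scikit-learn random forest regressor and the bundled diabetes dataset as example data. The loop shuffles one column at a time on a held-out split and records how much the MSE grows.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)

rng = np.random.default_rng(0)
# Step 1: error with the original values
baseline_mse = mean_squared_error(y_test, model.predict(X_test))

increase = np.zeros(X_test.shape[1])
for j in range(X_test.shape[1]):
    X_shuffled = X_test.copy()
    # Step 2: shuffle only column j, breaking its link to the target
    X_shuffled[:, j] = rng.permutation(X_shuffled[:, j])
    # Step 3: error with the shuffled values
    shuffled_mse = mean_squared_error(y_test, model.predict(X_shuffled))
    # Step 4: compare the two errors
    increase[j] = shuffled_mse - baseline_mse

for j in np.argsort(increase)[::-1]:
    print(f"feature {j}: MSE increase {increase[j]:.1f}")
```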

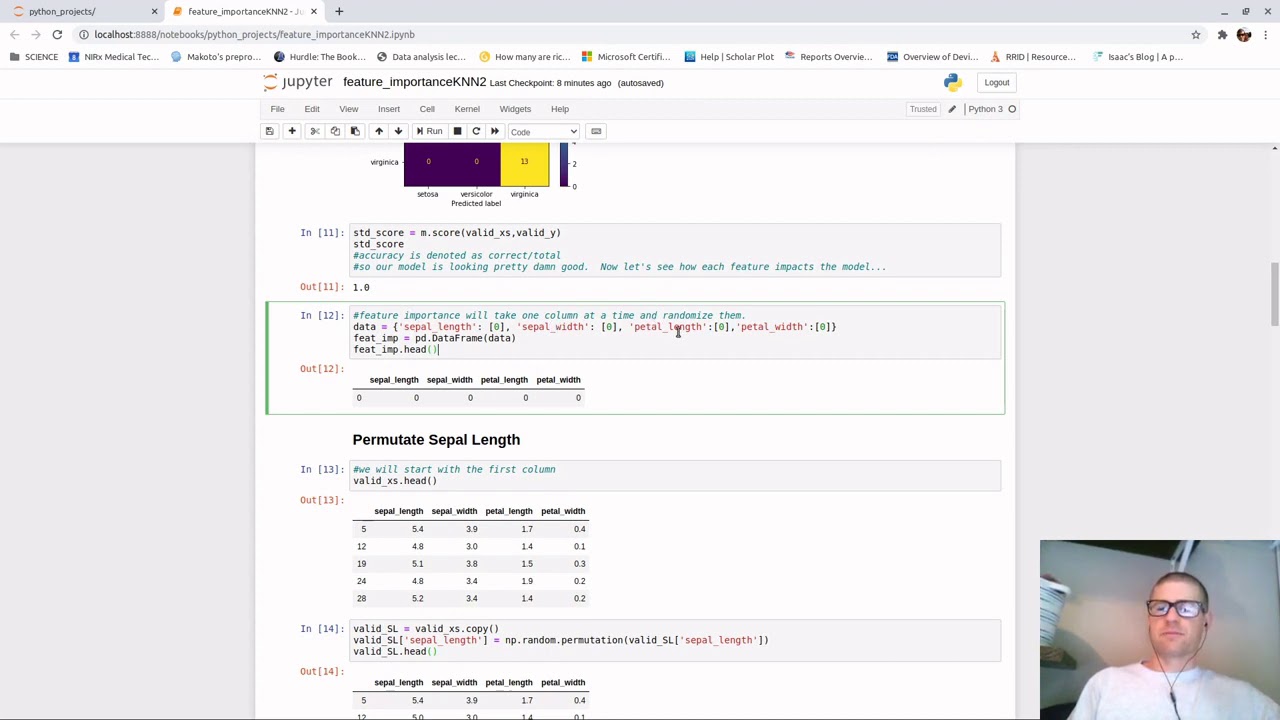

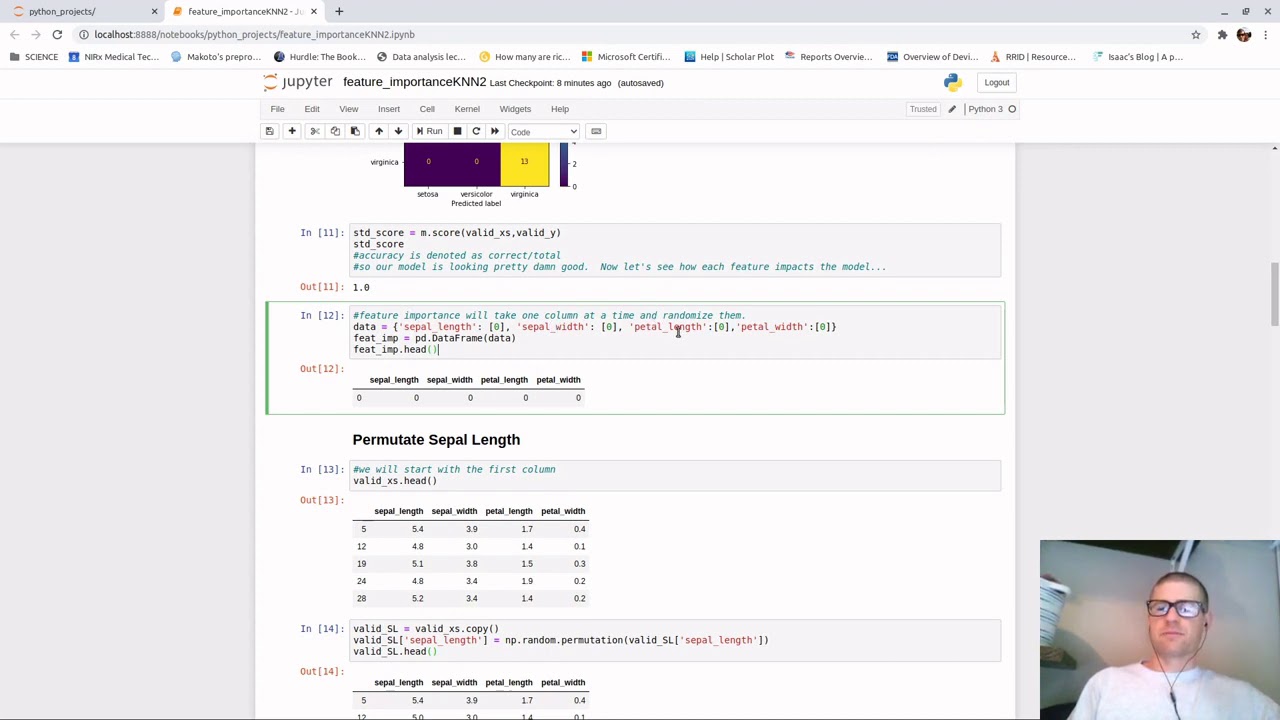


How do you interpret feature importance in a decision tree?

Feature importance is calculated as the decrease in node impurity weighted by the probability of reaching that node. The node probability can be calculated as the number of samples that reach the node divided by the total number of samples. The higher the value, the more important the feature.
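To see that formula at work, the sketch below recomputes a scikit-learn decision tree's importances directly from its fitted `tree_` arrays (`impurity`, `weighted_n_node_samples`, `children_left`, `children_right`, `feature`) and compares them with `feature_importances_`. The iris data and `max_depth=3` are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X, y)
tree = clf.tree_

# Probability of reaching each node: samples at the node / samples at the root
p = tree.weighted_n_node_samples / tree.weighted_n_node_samples[0]

importances = np.zeros(X.shape[1])
for node in range(tree.node_count):
    left, right = tree.children_left[node], tree.children_right[node]
    if left == -1:  # leaves have no children and do not split, so they add nothing
        continue
    # Impurity decrease at this split, each term weighted by its node probability
    decrease = (p[node] * tree.impurity[node]
                - p[left] * tree.impurity[left]
                - p[right] * tree.impurity[right])
    importances[tree.feature[node]] += decrease

importances /= importances.sum()  # normalize so the scores sum to 1
print(np.round(importances, 3))
print(np.allclose(importances, clf.feature_importances_))
```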

What is feature importance in Python?

Feature importance is a score assigned to the features of a machine learning model that defines how “important” a feature is to the model’s prediction. It can help with feature selection, and it can give us very useful insights about our data.

Does feature importance add up to 1?

In scikit-learn’s tree models, the importances are normalized: each feature importance is divided by the total sum of importances. So, in some sense, the feature importances of a single tree are percentages: they sum to one and describe how much a single feature contributes to the tree’s total impurity reduction.
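A quick way to check the normalization, assuming a scikit-learn random forest on the iris data as an example: every individual tree’s importances sum to 1, and so does the forest-level vector.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
forest = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)

# Sum of importances for each individual tree in the ensemble
tree_sums = [est.feature_importances_.sum() for est in forest.estimators_]
print(np.allclose(tree_sums, 1.0))

# The forest-level importances are normalized as well
print(forest.feature_importances_.sum())
```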

Which feature selection method is best?

Exhaustive Feature Selection: exhaustive feature selection is often cited as one of the best feature selection methods because it evaluates every feature subset by brute force. It tries every possible combination of features and returns the best-performing feature set, at a cost that grows exponentially with the number of features.
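A brute-force sketch of exhaustive selection follows. It assumes scikit-learn, a logistic regression as the model, and 5-fold cross-validated accuracy as the scoring rule. Both choices are arbitrary. With n features there are 2^n - 1 non-empty subsets, so this stays practical only for small n. Iris has 4 features, which means 15 subsets are evaluated.

```python
from itertools import combinations

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

data = load_iris()
X, y, names = data.data, data.target, data.feature_names
model = LogisticRegression(max_iter=1000)

best_score, best_subset = -1.0, None
n_features = X.shape[1]
# Try every non-empty subset of feature indices
for k in range(1, n_features + 1):
    for subset in combinations(range(n_features), k):
        score = cross_val_score(model, X[:, list(subset)], y, cv=5).mean()
        if score > best_score:
            best_score, best_subset = score, subset

print([names[i] for i in best_subset], round(best_score, 3))
```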

How do you analyze feature importance?

The concept is really straightforward: We measure the importance of a feature by calculating the increase in the model’s prediction error after permuting the feature. A feature is “important” if shuffling its values increases the model error, because in this case the model relied on the feature for the prediction.
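scikit-learn provides this procedure as `sklearn.inspection.permutation_importance`. The sketch below assumes a random forest regressor on the diabetes dataset as example data. With `scoring="neg_mean_squared_error"`, each importance is the mean increase in MSE when that feature is shuffled, averaged over `n_repeats` shuffles.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_diabetes()
X_train, X_test, y_train, y_test = train_test_split(data.data, data.target, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)

# Shuffle each feature 10 times on held-out data and record the drop in score
result = permutation_importance(
    model, X_test, y_test, scoring="neg_mean_squared_error", n_repeats=10, random_state=0
)

for i in result.importances_mean.argsort()[::-1]:
    print(f"{data.feature_names[i]:<6} {result.importances_mean[i]:8.1f} "
          f"+/- {result.importances_std[i]:.1f}")
```

Computing this on held-out data, rather than the training set, shows what the model relies on when it generalizes.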


Is feature importance reliable?

A tree-based model such as a random forest is often more reliable than a linear model for this purpose, so its feature importance is usually more accurate than the p-values of a linear model. A p-value test does not consider the relationship between two variables, so features with p_value > 0.05 might actually be important, and vice versa.

Does decision tree have feature importance?

Decision tree algorithms offer both explainable rules and feature importance values for non-linear models.
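Both outputs are available from a fitted scikit-learn tree. `export_text` prints the rules, and `feature_importances_` holds the importance values. The iris data and `max_depth=2` are assumptions chosen to keep the printout short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

data = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(data.data, data.target)

# Explainable rules: one "feature <= threshold" test per internal node
rules = export_text(clf, feature_names=list(data.feature_names))
print(rules)

# Importance values for the same model
for name, score in zip(data.feature_names, clf.feature_importances_):
    print(f"{name}: {score:.3f}")
```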

Is Hamming distance used for feature importance?

The minimum Hamming distance is used to define some essential notions in coding theory, such as error detecting and error correcting codes. In particular, a code C is said to be k error detecting if, and only if, the minimum Hamming distance between any two of its codewords is at least k+1.
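The definitions above translate directly into code. Here is a small sketch in plain Python. The helper names (`hamming`, `min_distance`, `is_k_error_detecting`) and the 3-bit repetition code used as input are hypothetical examples.

```python
from itertools import combinations


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length codewords differ."""
    if len(a) != len(b):
        raise ValueError("codewords must have equal length")
    return sum(x != y for x, y in zip(a, b))


def min_distance(code: list) -> int:
    """Minimum Hamming distance over all pairs of distinct codewords."""
    return min(hamming(a, b) for a, b in combinations(code, 2))


def is_k_error_detecting(code: list, k: int) -> bool:
    """A code is k error detecting iff its minimum distance is at least k + 1."""
    return min_distance(code) >= k + 1


# Hypothetical example: the 3-bit repetition code
code = ["000", "111"]
print(min_distance(code))             # 3
print(is_k_error_detecting(code, 2))  # True
print(is_k_error_detecting(code, 3))  # False
```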

How does a random forest give feature importance?

Random Forest Built-in Feature Importance

A random forest is a set of decision trees. Each decision tree is a set of internal nodes and leaves. In an internal node, the selected feature is used to decide how to divide the data set into two separate sets, each with similar responses within it.
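To make the internal-node description concrete, the sketch below walks the nodes of one tree inside a fitted scikit-learn random forest. For each internal node it prints the feature and threshold used to split the data. The iris data, `n_estimators=10` and `max_depth=2` are assumptions chosen to keep the output small.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

data = load_iris()
forest = RandomForestClassifier(n_estimators=10, max_depth=2, random_state=0)
forest.fit(data.data, data.target)

tree = forest.estimators_[0].tree_  # low-level arrays of the first tree
for node in range(tree.node_count):
    left, right = tree.children_left[node], tree.children_right[node]
    if left == -1:  # leaf: no split, it only holds a prediction
        print(f"node {node}: leaf (gini = {tree.impurity[node]:.3f})")
    else:
        name = data.feature_names[tree.feature[node]]
        print(f"node {node}: {name} <= {tree.threshold[node]:.2f} "
              f"-> left child {left}, right child {right}")
```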

What is the difference between features and importance?

As nouns, importance is the quality or condition of being important or worthy of note, while feature is (obsolete) one’s structure or make-up; form, shape, bodily proportions.

What is the difference between feature selection and feature importance?

Thus, feature selection and feature importance sometimes share the same technique but feature selection is mostly applied before or during model training to select the principal features of the final input data, while feature importance measures are used during or after training to explain the learned model.


Is scikit-learn important?

Scikit-learn is an indispensable part of the Python machine learning toolkit at JPMorgan. It is very widely used across all parts of the bank for classification, predictive analytics, and very many other machine learning tasks.

What is F score in feature importance?

In other words, F-score reveals the discriminative power of each feature independently from others. One score is computed for the first feature, and another score is computed for the second feature. But it does not indicate anything on the combination of both features (mutual information).
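You can check this per-feature independence with scikit-learn’s `f_classif`, which computes an ANOVA F-statistic for each feature. It is used here as a closely related stand-in and may not match the exact F-score formula this paragraph refers to. The iris data is example data.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.feature_selection import f_classif

data = load_iris()
X, y = data.data, data.target

# One F-statistic (and p-value) per feature
F, p_values = f_classif(X, y)
for name, f, p in zip(data.feature_names, F, p_values):
    print(f"{name}: F = {f:.1f}, p = {p:.2g}")

# Scoring column 2 on its own gives the same value: each score ignores the other features
F_single, _ = f_classif(X[:, [2]], y)
print(np.isclose(F_single[0], F[2]))
```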

Is random forest easy to interpret?

Decision trees are much easier to interpret and understand. Since a random forest combines multiple decision trees, it becomes more difficult to interpret. Here’s the good news – it’s not impossible to interpret a random forest.

What is feature importance in ML?

Feature importance refers to a class of techniques for assigning scores to the input features of a predictive model that indicate the relative importance of each feature when making a prediction.

How do we select important features from a dataset?

- Chi-square Test. …

- Fisher’s Score. …

- Correlation Coefficient. …

- Dispersion ratio. …

- Backward Feature Elimination. …

- Recursive Feature Elimination. …

- Random Forest Importance.
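Two of the methods listed above, the chi-square test and Recursive Feature Elimination, are available in scikit-learn’s `sklearn.feature_selection` module. Here is a sketch on the iris data, assuming we want to keep 2 features (an arbitrary choice).

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE, SelectKBest, chi2
from sklearn.linear_model import LogisticRegression

data = load_iris()
X, y, names = data.data, data.target, data.feature_names

# Chi-square test: needs non-negative features (iris measurements are all positive)
chi2_selector = SelectKBest(score_func=chi2, k=2).fit(X, y)
print("chi2 keeps:", [n for n, keep in zip(names, chi2_selector.get_support()) if keep])

# Recursive Feature Elimination: refit and drop the weakest feature until 2 remain
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=2).fit(X, y)
print("RFE keeps:", [n for n, keep in zip(names, rfe.support_) if keep])
```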

When should I do feature selection?

The aim of feature selection is to maximize relevance and minimize redundancy. Feature selection methods can be used in data pre-processing to achieve efficient data reduction. This is useful for finding accurate data models.

What are the three types of feature selection methods?

There are three types of feature selection: Wrapper methods (forward, backward, and stepwise selection), Filter methods (ANOVA, Pearson correlation, variance thresholding), and Embedded methods (Lasso, Ridge, Decision Tree).
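Here is one example of each family, sketched with scikit-learn on the diabetes dataset. `VarianceThreshold` serves as a filter, `SequentialFeatureSelector` (forward selection) as a wrapper, and `SelectFromModel` around a Lasso as an embedded method. The threshold, `alpha=1.0` and the target of 3 features are illustrative assumptions, not recommended values.

```python
from sklearn.datasets import load_diabetes
from sklearn.feature_selection import (
    SelectFromModel,
    SequentialFeatureSelector,
    VarianceThreshold,
)
from sklearn.linear_model import Lasso, LinearRegression

X, y = load_diabetes(return_X_y=True)

# Filter: drop features whose variance is at or below a threshold; no model involved
filt = VarianceThreshold(threshold=0.0).fit(X)

# Wrapper: forward selection, scoring candidate subsets with a model via cross-validation
wrapper = SequentialFeatureSelector(
    LinearRegression(), n_features_to_select=3, direction="forward"
).fit(X, y)

# Embedded: Lasso zeroes some coefficients during training; keep the non-zero ones
embedded = SelectFromModel(Lasso(alpha=1.0)).fit(X, y)

for label, selector in [("filter", filt), ("wrapper", wrapper), ("embedded", embedded)]:
    print(label, selector.get_support(indices=True))
```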

How does XGBoost calculate feature importance?

…

There are three ways to compute feature importance for XGBoost:

- built-in feature importance.

- permutation based importance.

- importance computed with SHAP values.

What is relative feature importance?

Gunnar König, Christoph Molnar, Bernd Bischl, Moritz Grosse-Wentrup. Interpretable Machine Learning (IML) methods are used to gain insight into the relevance of a feature of interest for the performance of a model.

What is permutation feature importance?

The permutation feature importance is defined to be the decrease in a model score when a single feature value is randomly shuffled [1]. This procedure breaks the relationship between the feature and the target, thus the drop in the model score is indicative of how much the model depends on the feature.

What is feature importance random forest?

Piotr Płoński (June 29, 2020): The feature importance (variable importance) describes which features are relevant. It can help with better understanding of the problem being solved and sometimes lead to model improvements through feature selection.

How do you get feature importance in KNN?

If you are set on using KNN though, then the best way to estimate feature importance is by taking the sample to predict on, and computing its distance from each of its nearest neighbors for each feature (call these neighb_dist ).
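Only the first step of that approach is described above, so this sketch stops at building `neighb_dist`. How those distances are then turned into a score is not stated here. The sketch assumes scikit-learn’s `NearestNeighbors`, the iris data and k = 5, and it holds out the first row as the hypothetical sample to explain.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import NearestNeighbors

X, _ = load_iris(return_X_y=True)
X_train, sample = X[1:], X[:1]  # hypothetical: explain the first row using the rest
k = 5

nn = NearestNeighbors(n_neighbors=k).fit(X_train)
_, idx = nn.kneighbors(sample)  # indices of the sample's k nearest neighbours

# neighb_dist[i, j]: distance between the sample and neighbour i along feature j alone
neighb_dist = np.abs(X_train[idx[0]] - sample)
print(neighb_dist.shape)  # (5, 4)
```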


How do you get feature importance in CatBoost?

To get this feature importance, CatBoost simply takes the difference between the metric (loss function) obtained using the model in the normal scenario (when the feature is included) and the model without this feature (the model is built approximately using the original model with this feature removed from all the trees in the …

What is Gini importance?

It is sometimes called “Gini importance” or “mean decrease impurity” and is defined as the total decrease in node impurity, weighted by the probability of reaching that node (approximated by the proportion of samples reaching that node), averaged over all trees of the ensemble.
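You can reproduce the averaging by hand for a scikit-learn random forest. Take each tree’s `feature_importances_`, average them, and compare the result with the forest’s own attribute. Here is a sketch on the iris data. scikit-learn skips single-node trees and renormalizes the average, which makes no difference here because every tree splits at least once.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Gini importance of each tree (each row already sums to 1)
per_tree = np.array([est.feature_importances_ for est in forest.estimators_])

# Averaging over the ensemble gives the forest-level importances
mean_importance = per_tree.mean(axis=0)
print(np.round(mean_importance, 3))
print(np.allclose(mean_importance, forest.feature_importances_))
```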

