How do you find the shape of a Spark DataFrame?

As with pandas, you can get the size and shape of a PySpark (Spark with Python) DataFrame by running the count() action to get the number of rows and len(df.columns) to get the number of columns. Note that columns is an attribute, not a method, and that a Spark DataFrame has no built-in shape attribute.
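A minimal sketch of this approach. The helper name `spark_shape` is hypothetical (it is not part of PySpark); it relies only on `DataFrame.count()` and the `DataFrame.columns` attribute. The `demo` function assumes a local PySpark installation and is not called automatically.

```python
def spark_shape(df):
    """Return (row_count, column_count) for a PySpark DataFrame.

    count() is an action and triggers a Spark job; columns is a plain
    Python list attribute, so len() on it is cheap.
    """
    return (df.count(), len(df.columns))


def demo():
    # Assumption: pyspark is installed and a local session can be started.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.master("local[1]").appName("shape-demo").getOrCreate()
    df = spark.createDataFrame([(1, "a"), (2, "b"), (3, "c")], ["id", "label"])
    print(spark_shape(df))  # 3 rows, 2 columns
    spark.stop()
```

Because `count()` scans the data, calling it repeatedly on a large DataFrame can be expensive; it may be worth storing the result.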

What is the shape of a DataFrame?

The shape attribute of pandas.DataFrame stores the number of rows and columns as a tuple: (number of rows, number of columns).
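For illustration, a small pandas example (the column names and values are made up):

```python
import pandas as pd

# Three rows, two columns.
df = pd.DataFrame({"id": [1, 2, 3], "label": ["a", "b", "c"]})

# shape is an attribute, not a method, so there are no parentheses.
rows, cols = df.shape
print(df.shape)  # (3, 2)
```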

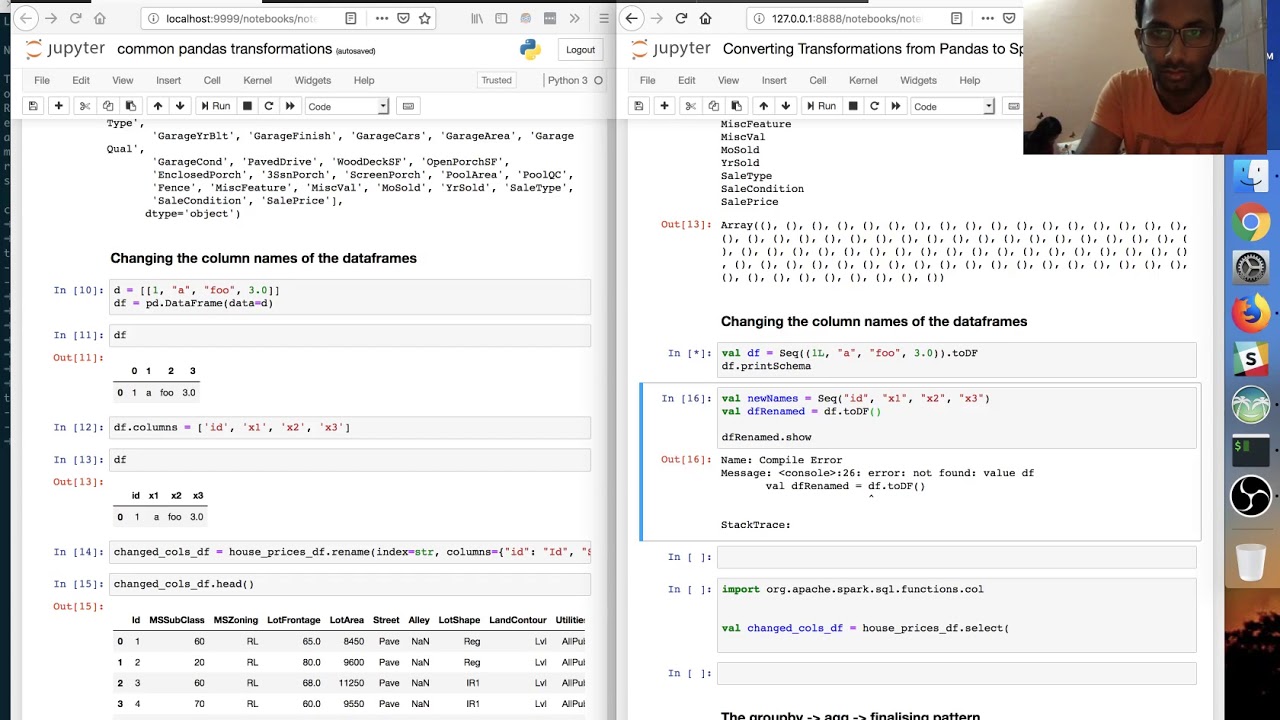

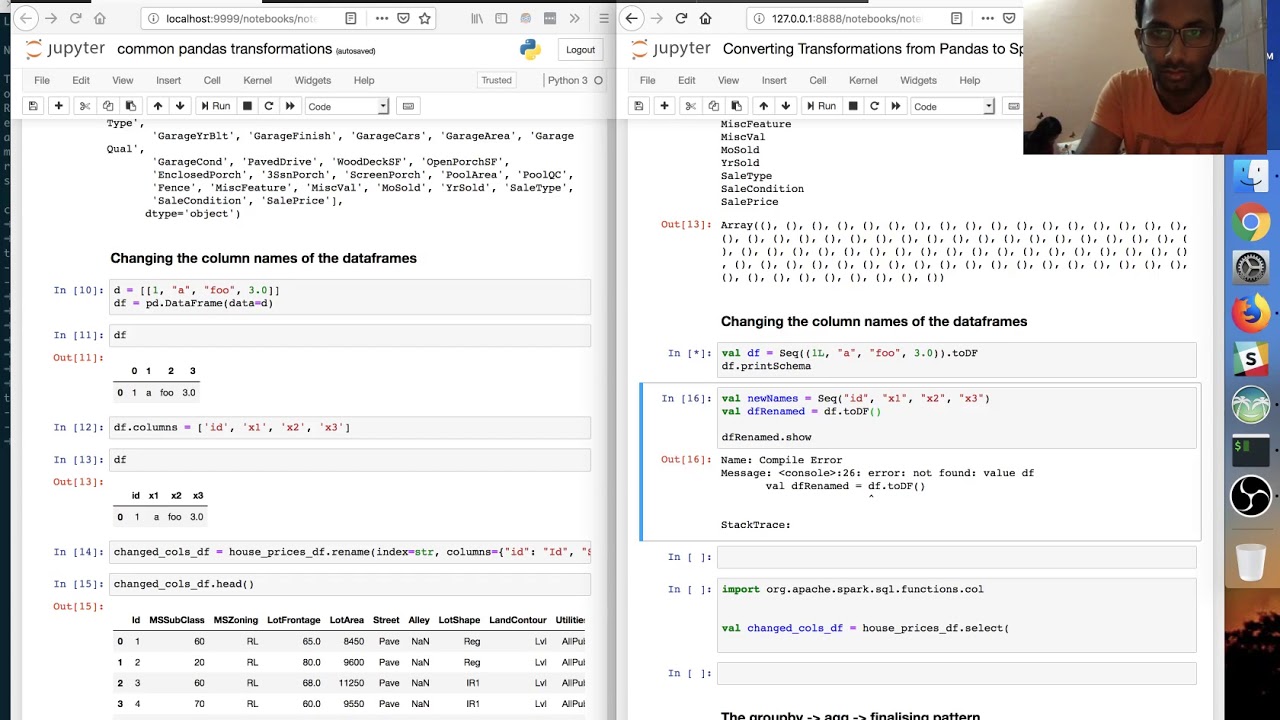

What is Spark DataFrame & its features?

A Spark DataFrame is an integrated data structure with an easy-to-use API for simplifying distributed big data processing. DataFrame is available for general-purpose programming languages such as Java, Python, and Scala.

What is the difference between Spark DataFrame and Pandas DataFrame?

…

Differences between a Spark DataFrame and a pandas DataFrame:

| Spark DataFrame | Pandas DataFrame |
|---|---|
| A Spark DataFrame is distributed across multiple nodes. | A pandas DataFrame lives on a single node. |

How do I get the size of a file in PySpark?

- Use org.apache.spark.util.SizeEstimator.

- Use df.inputFiles() and another API to get the file sizes directly (I did so using the Hadoop FileSystem API; see "How to get file size"). Note that this only works if the DataFrame has not been filtered or aggregated.
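The second option can be sketched as follows. This is not the Hadoop FileSystem approach mentioned above: as a simplifying assumption, it only handles files on the local filesystem (`file:` URIs or plain paths) and uses `os.path.getsize` instead. The helper name `local_input_size_bytes` is hypothetical.

```python
import os
from urllib.parse import urlparse
from urllib.request import url2pathname


def local_input_size_bytes(df):
    """Sum the on-disk sizes of the files backing a DataFrame.

    Assumption: every entry returned by df.inputFiles() is a local
    file (a file: URI or a plain path). Remote schemes such as
    hdfs:// or s3a:// are rejected.
    """
    total = 0
    for uri in df.inputFiles():
        parsed = urlparse(uri)
        if parsed.scheme not in ("", "file"):
            raise ValueError(f"not a local file: {uri}")
        total += os.path.getsize(url2pathname(parsed.path))
    return total
```

The result is the size of the source files, not of the DataFrame in memory, which is why it stops being meaningful once rows have been filtered or aggregated.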

How do I determine the size of a table in Databricks?

You can determine the size of a non-Delta table by summing the sizes of the individual files in the underlying directory. You can also use queryExecution.analyzed.stats to return the size.
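A rough sketch of the file-summing idea, assuming the table directory is reachable as an ordinary local path (on a real Databricks workspace you would list the files through the platform's own file utilities instead). The function name `directory_size_bytes` is hypothetical.

```python
import os


def directory_size_bytes(root):
    """Return the total size in bytes of all files under root, recursively."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            total += os.path.getsize(os.path.join(dirpath, name))
    return total
```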

What are the shape and size of a DataFrame?

Size and shape of a DataFrame in pandas: the size of a DataFrame is the number of elements it holds, that is, the number of rows multiplied by the number of columns. The shape of a DataFrame gives the number of rows and the number of columns.
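A short check of that relationship, using made-up data:

```python
import pandas as pd

# Four rows, three columns.
df = pd.DataFrame({"a": [1, 2, 3, 4], "b": [5, 6, 7, 8], "c": [9, 10, 11, 12]})

n_rows, n_cols = df.shape
print(df.shape)  # (4, 3)
print(df.size)   # 12, i.e. 4 rows * 3 columns
```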

How do you find the shape of a Spark DataFrame in PySpark (with code)?

The row and column counts of a Spark DataFrame are obtained separately: use the count() function for rows and len(df.columns) for columns.

What is the size of a Dataframe?

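The long answer is that the size limit for a pandas DataFrame is set by the memory available on the machine rather than by a fixed number of cells. The figure of 100 gigabytes (GB) is a rule of thumb, not a limit enforced by pandas.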

How do you find the dimensions of a data frame?

In pandas, the DataFrame.size property returns the size of a DataFrame. The same property exists on a Series, and in both cases it equals the total number of elements. For a Series, this is the number of rows.

Why do we use data frames in Spark?

DataFrames can be thought of as tables in a relational database, with better optimization techniques under the hood. Spark DataFrames can be created from various sources, such as Hive tables, log tables, external databases, or existing RDDs. DataFrames allow the processing of huge amounts of data.

Are Spark DataFrames immutable?

While PySpark derives its basic data types from Python, its own data structures are limited to RDDs, DataFrames, and GraphFrames. These DataFrames are immutable and offer less flexibility for row- and column-level handling than plain Python.

Are Spark DataFrames in memory?

The cache() method of a Spark DataFrame or Dataset saves it at storage level `MEMORY_AND_DISK` by default, because recomputing the in-memory columnar representation of the underlying table is expensive. Note that this is different from the default cache level of an `RDD`, which is `MEMORY_ONLY`.

Why is PySpark better than Pandas?

Because it executes in parallel on all cores of multiple machines, PySpark can run operations on large datasets faster than pandas, so we are often required to convert a pandas DataFrame to a PySpark (Spark with Python) DataFrame for better performance. This is one of the major differences between pandas and PySpark DataFrames.

What is PySpark DataFrame?

PySpark DataFrames are tables that consist of rows and columns of data. They have a two-dimensional structure in which every column holds the values of a particular variable and each row holds a single set of values, one from each column.

Are Spark and PySpark different?

Apache Spark is written in the Scala programming language. PySpark was released to support the collaboration of Apache Spark and Python; it is a Python API for Spark. In addition, PySpark helps you interface with Resilient Distributed Datasets (RDDs) in Apache Spark from the Python programming language.

How do I find the number of rows in a spark data frame?

- count(): This function returns the number of rows in the DataFrame.

- distinct(). …

- columns: This attribute holds the list of column names present in the DataFrame.

- len(df.
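The calls in this list can be combined as sketched below. The helper name `summarize_counts` is hypothetical, and pairing `distinct()` with `count()` to count unique rows is an assumption about how the list above is meant to be used.

```python
def summarize_counts(df):
    """Collect basic counts for a PySpark DataFrame.

    Each count() call is an action that runs a Spark job, so this
    triggers two jobs; len(df.columns) is a local operation.
    """
    return {
        "rows": df.count(),
        "distinct_rows": df.distinct().count(),
        "columns": len(df.columns),
    }
```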

What is explode in PySpark?

explode is a PySpark function that expands array or map columns into rows. It takes the column and separates its contents into new rows, returning one row for each element in the array or map.

How do you get the number of rows in a Dataframe in PySpark?

The count() function returns the number of rows in the DataFrame.

What is Spark SQL?

Spark SQL is a Spark module for structured data processing. It provides a programming abstraction called DataFrames and can also act as a distributed SQL query engine. It enables unmodified Hadoop Hive queries to run up to 100x faster on existing deployments and data.

What is data shape in Python?

shape is a tuple that gives the dimensions of an array: it has one entry per dimension, and each entry is the length along that dimension. shape is an attribute, not a function. If Y has w rows and z columns, then Y.shape is (w, z).
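As a concrete case, assuming the array is a NumPy array with w = 4 rows and z = 3 columns:

```python
import numpy as np

# A 2-D array with 4 rows and 3 columns.
Y = np.zeros((4, 3))

print(Y.shape)       # (4, 3)
print(len(Y.shape))  # 2, one entry per dimension
```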

Which function of the DataFrame gives us its size?

The size, shape, and ndim attributes return the size, shape, and number of dimensions of DataFrames and Series. size returns the total number of elements in the DataFrame or Series; for a DataFrame, that is rows × columns.
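The three attributes side by side, for a DataFrame and for one of its columns taken as a Series (made-up data):

```python
import pandas as pd

df = pd.DataFrame({"x": [1, 2, 3], "y": [4, 5, 6]})
s = df["x"]  # a single column is a Series

print(df.size, df.shape, df.ndim)  # 6 (3, 2) 2
print(s.size, s.shape, s.ndim)     # 3 (3,) 1
```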
